Using Jenkins CI/CD for Container Certification

Jenkins is a popular CI/CD tool among Red Hat partners, so Jenkins integrations are a practical way to enable CI/CD within the partner ecosystem. This post focuses exclusively on those integrations and is intended for partners who use CI/CD to automate container certification. It does not cover generic best practices for Jenkins servers; consult the official Jenkins resources for production deployment, usage, and secure operation of Jenkins.

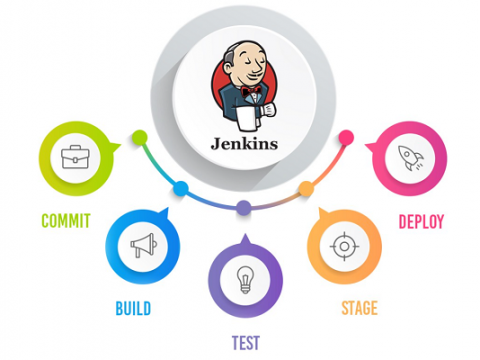

What is Jenkins?

Jenkins is a leading open source automation server for software development projects. It enables continuous integration and continuous delivery (CI/CD) by automating phases of software development, including building, testing, and deployment. Jenkins is extensible and supports numerous integrations through its plugin architecture. Several best practices in this post rely on plugins to achieve the desired integrations.

Jenkins Server

For the purpose of this post, let’s assume a Jenkins server is in use. If Jenkins is not already installed, refer to the Jenkins documentation for all installation options. Jenkins can also be deployed on Red Hat OpenShift by referencing and following the posts here.

The following Jenkins plugins are also required for the integrations described in this post. They can be installed with the Jenkins server’s Plugin Manager:

- Pipeline

- Docker Pipeline

- Git

- GitHub

Jenkins Pipeline

A Jenkins pipeline is required for the integrations presented here. The procedure described in this post can be used as a reference to create a pipeline that is compatible with the suggested integrations. First, however, note the following recommendation for the configuration and application files used throughout the Jenkins deployment:

[Recommendation] Add Jenkinsfiles and Dockerfiles to Source Code Management (SCM)

Explanation

Building images cleanly from source produces the most reliable builds. This also ensures that builds can be readily reproduced. Storage of source artifacts is also centralized and managed.

Implementation

As an example in this post, the Jenkinsfile configuration file for the Jenkins pipeline and the Dockerfile defining the application image are stored in a git repository. This post will utilize GitHub for this purpose.

Creating a Jenkins Pipeline

- From the Jenkins Dashboard select New Item and create a Pipeline.

- In the Build Triggers section of the General tab, check the Poll SCM checkbox. This option configures Jenkins to check the repository according to the defined Schedule and to trigger a pipeline build with the current Jenkinsfile when the repository has changed. The Schedule textbox accepts input in a modified cron format. For example, entering ‘H * * * *’ sets the schedule to hourly. Later in this post, webhook triggers are recommended over polling triggers. Polling is used here only as a starting point and because not all networks permit webhook interaction.

- In the Pipeline section, set Definition to Pipeline script from SCM. Set SCM to Git. In the Repositories subsection, set Repository URL to the URL of the GitHub repository.

- If necessary, in the Pipeline section, in the Branches to build subsection, set the desired branch of the repository in Branch Specifier.
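
The Poll SCM setting from the steps above can also be kept in the Jenkinsfile instead of being set by hand in the UI. The following is a minimal sketch for a scripted pipeline, assuming the properties step and the pollSCM trigger provided by the Pipeline plugins are available. The single stage is only there to make the fragment complete.

```groovy
// Minimal sketch: declare the Poll SCM trigger in a scripted Jenkinsfile.
// Assumes the Pipeline plugin listed above is installed.
properties([
    pipelineTriggers([
        // 'H * * * *' polls once an hour; H lets Jenkins choose the minute
        pollSCM('H * * * *')
    ])
])

node {
    stage('Clone repository') {
        // Check out the repository configured for this pipeline
        checkout scm
    }
}
```

Jenkins applies the trigger only after the pipeline has run once with this Jenkinsfile, so the first build still has to be started manually. The properties step also replaces trigger settings made in the UI for the same pipeline.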

For Red Hat partners pursuing container certification, this recommendation applies:

[Recommendation] Create one Jenkins pipeline per Red Hat Connect project

Explanation

Each container certification project in Red Hat Connect corresponds to one container image. Certification scanning requires that each submitted image carry a tag that is unique to its project. Because each pipeline is configured against a single git repository, only one image should be in scope per pipeline.

Implementation

Refer to the steps for creating a Jenkins pipeline and modify the Repository URL accordingly.

Jenkinsfile Configuration

For purposes of demonstrating the different Jenkins integrations below, this simple Jenkinsfile configuration will be used to reference a basic Jenkins pipeline.

This pipeline consists of the following four stages:

- Cloning a code repository

- Building a container image from the Dockerfile within that repository

- Testing the built container image

- Pushing the image to a container registry

Clone Stage

This stage checks out the repository, including the Jenkinsfile shown below, each time the pipeline is triggered.

Jenkinsfile

    node {
        def app

        // Clone Stage
        stage('Clone repository') {
            checkout scm
        }

        // Build Stage
        stage('Build image') {
            app = docker.build("myrepo/mytag:${env.BUILD_NUMBER}")
        }

        // Test Stage
        stage('Test image') {
            app.inside {
                sh 'echo "Tests passed"'
            }
        }

        // Push Stage
        stage('Push image') {
            app.push("${env.BUILD_NUMBER}")
        }
    }

While the sample Pipeline here relies on a scheduled check for new configurations to trigger a pipeline build, a more automated trigger should be used according to this best practice:

[Recommendation] SCM should trigger pipeline execution when new or updated source code is pushed

Explanation

Rather than having Jenkins repeatedly poll source repositories for new code, the SCM should notify Jenkins and trigger the pipeline only when new code is pushed to a repository. This approach is more efficient: builds are triggered as soon as code is pushed, with no wait for the next polling interval, and no unnecessary network requests are generated.

Implementation

Since this example uses GitHub for SCM, webhooks will be used to contact Jenkins and execute a pipeline build when code in the git repository changes. This requires that the Jenkins server be accessible from the Internet by GitHub. Routing and access control changes may be required to accomplish this and are outside the scope of this post.

For this example, these configurations must be performed to connect GitHub to Jenkins via webhook:

GitHub

- From the repository, navigate to Settings, Webhooks, and click Add webhook.

- For Payload URL, enter the address of the Jenkins server with /github-webhook/ appended. For example: https://jenkins-server.com:8080/github-webhook/

- Change Content type to application/json.

- The default trigger of Just the push event is sufficient for this example.

- Ensure the Active checkbox is checked and click Add webhook.

Jenkins

- In the Build Triggers section of the General tab, uncheck the Poll SCM checkbox. This is only necessary if SCM polling was configured above.

- Check the box for GitHub hook trigger for GITScm polling and Save.
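
The same trigger can be declared in the Jenkinsfile rather than in the UI. The following is a minimal sketch for a scripted pipeline, assuming the GitHub plugin listed earlier is installed, since it provides the githubPush trigger. The single stage is only there to make the fragment complete.

```groovy
// Minimal sketch: enable the "GitHub hook trigger for GITScm polling"
// option from a scripted Jenkinsfile. Assumes the GitHub plugin is installed.
properties([
    pipelineTriggers([
        // Start a build when GitHub delivers a push event to /github-webhook/
        githubPush()
    ])
])

node {
    stage('Clone repository') {
        // Check out the repository configured for this pipeline
        checkout scm
    }
}
```

As with any trigger set through the properties step, the pipeline must run once before Jenkins registers it, and the webhook on the GitHub side is still configured as described above.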

Build Stage

This stage requires that Docker is installed on the host where the Jenkins server runs. Docker builds a container image according to the Dockerfile stored in the git repository. The resulting image is tagged in the form repository/name:build-number, where the build number comes from env.BUILD_NUMBER.

For example: myrepo/mytag:1

For Red Hat partners pursuing container certification, this recommendation applies:

[Recommendation] Tag images according to Red Hat Connect project

Explanation

Before container images are submitted to Red Hat Connect for certification scanning, they must be tagged so that they are associated with the correct project in the certification registry. Tagging images automatically in a stage of the Jenkins pipeline removes the need to tag them manually and reduces the chance of error.

Implementation

For this example, the certification project’s unique tag must be collected from Connect and added to the Build stage in the Jenkins pipeline.

Red Hat Connect

- Navigate to the project associated with the image being built in this pipeline in Connect: https://connect.redhat.com/projects/.

- From the Images tab, copy only the project ID value, including the ‘ospid-’ prefix, from the Tag Your Container section.

For example: ospid-abcdef12-3456-7890-abcd-ef12345678ab

Jenkins

Modify the Build stage of the Jenkinsfile to include the Connect project’s tag:

    stage('Build image') {
        app = docker.build("ospid-abcdef12-3456-7890-abcd-ef12345678ab/ubi8-httpd:${env.BUILD_NUMBER}")
    }

Test Stage

In this stage, only a basic placeholder test is performed. Remember that this pipeline is not representative of a production pipeline; it is used to highlight best practice integrations. Actual partner pipelines are likely to be more sophisticated, with multiple testing steps and stages and more advanced logic.

For Red Hat partners pursuing container certification, this recommendation applies:

[Recommendation] Automatically test for Red Hat container certification requirements

Explanation

Testing that container images meet certification requirements before they are submitted to Red Hat Connect for certification scanning improves efficiency. Red Hat’s Container Factory pipeline does not always guarantee timely scan results, so testing images for compliance in the pipeline avoids waiting on a scan only to learn that the image is non-compliant.

Implementation

Partners should reference the Container Certification Policy Guide and design tests according to the policies documented there.
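
As one illustration of such a test, the sketch below replaces the placeholder Test stage of the sample Jenkinsfile with a check that fails the build when an expected image label is missing. It assumes the app variable holds the image returned by docker.build in the Build stage and that the Docker CLI is available to the pipeline. The label list is an assumption made for illustration only; the authoritative list of requirements is the Container Certification Policy Guide.

```groovy
// Sketch of a Test stage that verifies labels on the built image.
// Expects 'app' to be the image object created in the Build stage.
stage('Test image') {
    // ASSUMPTION: illustrative label names; take the real list from the
    // Container Certification Policy Guide.
    def requiredLabels = ['name', 'vendor', 'version', 'release', 'summary', 'description']

    for (labelName in requiredLabels) {
        // Ask the Docker CLI for the value of one label; a missing label prints an empty line
        def value = sh(
            script: "docker inspect --format '{{ index .Config.Labels \"${labelName}\" }}' ${app.id}",
            returnStdout: true
        ).trim()

        if (!value) {
            // error() marks the build as failed and stops the pipeline
            error("Image ${app.id} is missing required label '${labelName}'")
        }
    }
}
```

Failing in the Test stage means the Push stage never runs, so a non-compliant image is not submitted for scanning. Checks for other policies can follow the same pattern.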

Push Stage

For this stage a generic Docker Hub push is provided. Two best practices can be recommended for this stage:

[Recommendation] Automatically push images to dedicated registry

Explanation

Images built and tested with Jenkins should be stored elsewhere and not on the Jenkins server. This principle of least functionality enhances the security, scalability, and performance of the architecture. The partner should also not rely solely on Red Hat Connect or the Red Hat Container Catalog for storing images. Any applicable requirements for business continuity compliance should be referenced to further shape this best practice.

Implementation

For this example, the Jenkins pipeline will push the container image to the Quay.io registry. This requires an available repository on Quay.io, the Quay.io credentials, and an update to the Jenkinsfile:

- From the Jenkins Dashboard, navigate to Manage Jenkins, Manage Credentials, the global Jenkins store, and click Add Credentials.

- Enter the Username and Password for Quay.io, name this credential with an ID, and click Save.

- Modify the Push stage of the Jenkinsfile to push to Quay.io. Here the ID for the credential is quay-credentials.

        stage('Push image') {
            docker.withRegistry('https://quay.io', 'quay-credentials') {
                app.push("${env.BUILD_NUMBER}")
            }
        }

For Red Hat partners pursuing container certification, this recommendation applies:

[Recommendation] Automatically push images to Red Hat Connect for scanning

Explanation

Images that would normally be manually pushed to Red Hat Connect for certification scanning should be automatically pushed to improve efficiency.

Implementation

For this example, the Jenkins pipeline will push the container image to the Connect registry. This requires an existing project on Red Hat Connect, the Connect project’s registry key, and an update to the Jenkinsfile:

Red Hat Connect

- Navigate to the project associated with the image being pushed in this pipeline in Connect: https://connect.redhat.com/projects/.

- From the Images tab, copy the registry key from the View Registry Key section.

Jenkins

- From the Jenkins Dashboard, navigate to Manage Jenkins, Manage Credentials, the global Jenkins store, and click Add Credentials.

- Enter the Username ‘unused’ and the registry key from above, name this credential with an ID, and click Save.

- Modify the Push stage of the Jenkinsfile to push to Red Hat Connect. Here the ID for the credential is connect-credentials.

        stage('Push image') {
            docker.withRegistry('https://scan.connect.redhat.com', 'connect-credentials') {
                app.push("${env.BUILD_NUMBER}")
            }
        }

Conclusion

This post documented several useful Jenkins integrations that partners can use to enable CI/CD within their workflows. Partners pursuing Red Hat container certification can use these best practices to achieve better automation in releasing certified container applications.

Are you interested in becoming a Red Hat partner and adopting the OpenShift platform for your application? If you have questions, please submit them to our virtual Partner Success Desk.

References & Learning More