Introduction to the Red Hat Distributed Continuous Integration process

What does Red Hat’s CI process look like from a partner perspective? Let’s examine the key concepts and workflow.

Red Hat is well known for infrastructure software such as Red Hat Enterprise Linux, Red Hat OpenShift, and Red Hat OpenStack. These are established technologies for our customers and our partners. To keep the software as stable as possible, Red Hat runs a range of quality assurance (QA) and continuous integration (CI) processes. This article looks at the CI workflow specifically, and at what it looks like from a partner’s perspective.

The Red Hat partner use case

There are several kinds of partner companies. The main kind is what we call “hardware vendors”, who sell specific hardware. In the IT market, for instance, we see companies that sell servers, networking components, hardware storage solutions, etc. There are also Independent Software Vendors (ISVs), who sell specific software solutions in various areas.

The common denominator among all of these companies is that they want to minimize the number of bugs that occur when integrating their stack with Red Hat’s. To do so, the overarching goal is to start this integration as early as possible, ideally before the products (on both sides) are released to general availability (GA). This is the use case where the Red Hat Distributed Continuous Integration (DCI) workflow comes into play.

The DCI workflow

The main idea behind DCI is to integrate and test multiple pre-release software packages together in order to be predictive (which software combinations work correctly?) and to provide useful insights for both Red Hat and the partner. To do so, Red Hat engineers and the partner’s engineers collaborate on the full integration; once this is done, the DCI automation can replay the process as new unreleased software is pushed.

Another very important benefit of such a workflow is that all these tests take place inside the partner’s walls, with their own specific hardware and configuration. This allows Red Hat to reduce part of the CI cost and to rely on the partner’s knowledge of their stack (partners are the subject matter experts for their own software!), thus avoiding the need for consulting or a learning curve.

Let’s explore how all these concepts fit together.

The DCI concepts

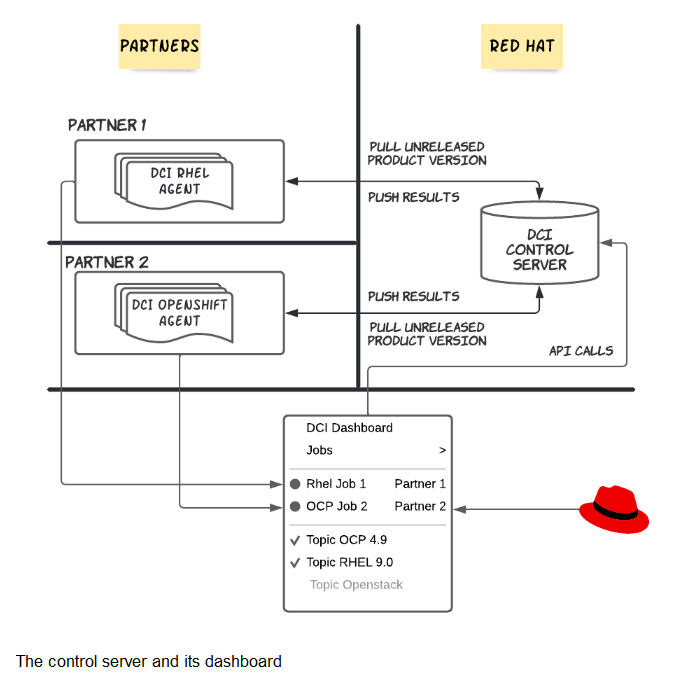

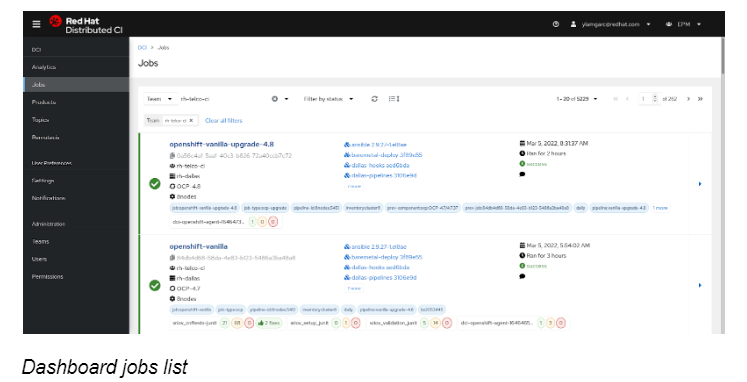

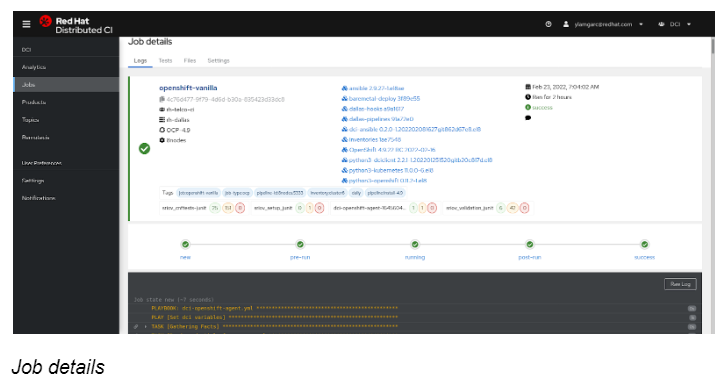

As shown above, we decided to centralize all partner interactions in a single place called the Control Server. It is a multi-tenant RESTful API that provides the resources needed to run the CI jobs. The dashboard is the main user interface where partners visualize their job results. This is what it looks like:

When partners are onboarded, they are first invited to sign in with their Red Hat account through the dashboard, and the first login automatically creates a partner account in the DCI database. An administrator may then add the partner’s account to a team. This is a very important step: by creating one team per partner, we isolate partners from one another (partners must not see each other’s activities).
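The team-based isolation described above can be pictured as a simple filter on job ownership. The following is only a minimal sketch and not the Control Server's actual implementation; the `Job` class, the `visible_jobs` function, and the team names are hypothetical and exist purely for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Job:
    """Hypothetical job record: each job is owned by exactly one team."""
    job_id: str
    team: str


def visible_jobs(jobs: List[Job], user_team: str) -> List[Job]:
    """Return only the jobs owned by the caller's team."""
    return [job for job in jobs if job.team == user_team]


# Illustrative data: two partners, each in its own team.
jobs = [
    Job("job-1", "partner-a"),
    Job("job-2", "partner-b"),
    Job("job-3", "partner-a"),
]

print([job.job_id for job in visible_jobs(jobs, "partner-a")])
```

With one team per partner, a user in `partner-a` never receives the job owned by `partner-b`.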

What is the difference between an agent and a remote ci?

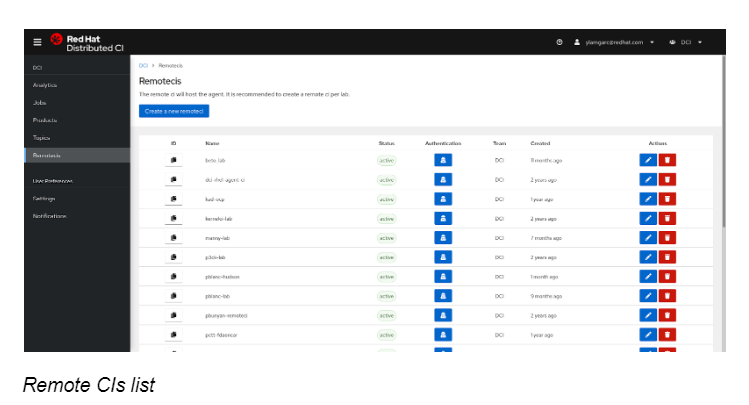

Once partners are attached to their team, the first thing they will want to do is run a job. Before doing so, they must create what we call a “remote ci”. A remote ci is how we identify a partner platform: when a job is created in the Control Server, the remote ci clearly identifies where the job was run. This is an important piece of information because a partner might have multiple platforms with different hardware configurations.

In practice, a remote ci acts like a “user” with its own authentication mechanism, and its credentials are used by the agent to interact with the Control Server’s API. So what, then, is a DCI agent?

A DCI agent is in charge of actually running the job on the partner’s platform and reporting back to the Control Server. Red Hat offers one agent per product, and we support OpenShift, RHEL, and OpenStack.

All agents are Ansible-based and they drive their respective Red Hat installers to deploy the product on the partner’s platform. The plumbing from the agent to the Control Server is handled transparently by a dedicated Ansible callback plugin. A mechanism based on “hooks” lets partners plug in their own tasks during a job run, which makes it easy to customize the deployment for their specific needs.
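To make the hook idea concrete, here is a minimal Python analogy. The real agents are Ansible-based, so this is not how they are implemented; the `HookRunner` class, the stage names, and the two partner functions are assumptions made up for this sketch.

```python
from typing import Callable, Dict, List

# Hypothetical stage names, loosely modeled on the job steps described in this article.
STAGES = ["pre-run", "running", "post-run"]

Hook = Callable[[dict], None]


class HookRunner:
    """Runs partner-supplied hooks stage by stage, in a fixed stage order."""

    def __init__(self) -> None:
        self._hooks: Dict[str, List[Hook]] = {stage: [] for stage in STAGES}

    def register(self, stage: str, hook: Hook) -> None:
        """Attach a partner task to one of the known stages."""
        if stage not in self._hooks:
            raise ValueError(f"unknown stage: {stage}")
        self._hooks[stage].append(hook)

    def run(self, context: dict) -> dict:
        """Call every registered hook, following the order of STAGES."""
        for stage in STAGES:
            for hook in self._hooks[stage]:
                hook(context)
        return context


def load_firmware(context: dict) -> None:
    # Illustrative partner task executed before the deployment.
    context["steps"].append("partner: load firmware")


def run_partner_tests(context: dict) -> None:
    # Illustrative partner task executed while the job is running.
    context["steps"].append("partner: run storage tests")


runner = HookRunner()
runner.register("running", run_partner_tests)
runner.register("pre-run", load_firmware)
result = runner.run({"steps": []})
print(result["steps"])
```

Hooks run in stage order regardless of registration order, so the partner only decides what to plug in and where, not how the job is sequenced.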

What is a job and why are components so important?

A job represents the output of an agent run through a given remote ci, as pictured here.

The job is at the center of all DCI concepts and is composed of several artifacts. While a job is running, its progression is split into several steps, and each step is called a Jobstate. A job goes through the following:

- new: schedule a job

- pre-run: prepare the environment

- pre-run: download the required artifacts

- running: deploy the product

- running: run some tests

- post-run: upload logs and test results

- success, failure, error: final status

The benefit of such a taxonomy is that it eases post-mortem analysis and lets you take measurements during each step to detect anomalies. Analytics tools can also be plugged in on top of this mechanism.
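As an illustration of the per-step measurements mentioned above, the sketch below computes the time spent in each state from a list of timestamped jobstates. The event format (seconds since job start paired with a state name) and the `time_per_state` function are assumptions for this example, not the data model of the Control Server.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def time_per_state(events: List[Tuple[float, str]]) -> Dict[str, float]:
    """Sum the seconds spent in each state.

    `events` must be ordered by time. Each event marks the start of a step,
    and a step lasts until the next event. The last event (the final status)
    therefore has no duration.
    """
    totals: Dict[str, float] = defaultdict(float)
    for (start, state), (end, _next_state) in zip(events, events[1:]):
        totals[state] += end - start
    return dict(totals)


# Illustrative timeline following the steps listed above.
events = [
    (0, "new"),          # schedule a job
    (5, "pre-run"),      # prepare the environment
    (65, "pre-run"),     # download the required artifacts
    (125, "running"),    # deploy the product
    (1925, "running"),   # run some tests
    (2525, "post-run"),  # upload logs and test results
    (2585, "success"),   # final status
]

for state, seconds in time_per_state(events).items():
    print(f"{state}: {seconds:.0f}s")
```

Comparing these totals across jobs is one simple way to spot an anomaly, for example a deployment step that suddenly takes twice as long as usual.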

A job always runs against something that you’re interested in. For example, for RHEL it could be an unreleased Compose, for OpenShift it could be a nightly version, etc. All these kinds of artifacts are called components. More specifically, components represent everything related to the context in which a job executes, and they help to understand the job’s results.

Components list

In the CI world, the key ingredient for success is repeatability. When a job fails, we want to understand why and, more importantly, we want to be able to reproduce the failure. To do so, a job can specify as many components as required, and agents can usually list dependencies explicitly so that they are added as components of the job.

In Red Hat’s Ansible-based agents, we enforce this practice with a special action plugin to automatically create components when some repos are cloned.
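One way to see why recording components helps with reproducing a failure is to compare the components of a passing job with those of a failing one. The sketch below is only an illustration under assumed data: the `component_diff` function, the component names, and the version strings are all hypothetical.

```python
from typing import Dict, Optional, Tuple


def component_diff(
    passing: Dict[str, str], failing: Dict[str, str]
) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return the components whose version differs between two jobs.

    Each job is described as {component name: version}. A component that is
    missing from one of the jobs is reported with None on that side.
    """
    names = sorted(set(passing) | set(failing))
    return {
        name: (passing.get(name), failing.get(name))
        for name in names
        if passing.get(name) != failing.get(name)
    }


# Hypothetical component lists attached to two jobs.
passing_job = {"product-build": "nightly-01", "partner-driver": "1.2.0"}
failing_job = {
    "product-build": "nightly-02",
    "partner-driver": "1.2.0",
    "test-suite": "0.9",
}

print(component_diff(passing_job, failing_job))
```

Only the components that changed are reported, which narrows down where to look first when a job starts failing.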

Finally, the last kind of reported artifact is called a file. It could be a RHEL sosreport, an OpenShift must-gather tarball, service logs, etc.

Choosing a specific version stream with a topic

So we’ve seen how components relate to jobs, but how are components organized? How do you run a job with a specific version of a product? This is the purpose of DCI topics.

A product is usually released under a specific version, and this version follows a schema. The most commonly used schema is semver, which follows the nomenclature “MAJOR.MINOR.PATCH”, for example OpenShift 4.9.1. In DCI, we provide a stream for each “MAJOR.MINOR”, for example OpenShift 4.9, RHEL 8.4, etc. These streams are called topics, and a team can subscribe to the topics of interest depending on their needs.

When an agent asks the Control Server to schedule a job, it must provide the topic the job should run against. By default, the server picks the latest version available for this topic; for example, in the topic OpenShift 4.9, it could be OpenShift 4.9.23. For various reasons, some partners might want to pin a component, for instance to reproduce a bug. In this case, the agent sends the component identifier along with the topic. This is easily configurable in the agent’s settings.
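The selection rule described above (latest version in the topic by default, a pinned version when requested) can be sketched as follows. This is an assumption-laden illustration, not the server's scheduling code: `select_version`, `parse_version`, and the list of available versions are hypothetical, and versions are assumed to be plain dotted integers.

```python
from typing import List, Optional, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '4.9.23' into (4, 9, 23) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def select_version(
    available: List[str], topic: str, pinned: Optional[str] = None
) -> str:
    """Pick the version a job should run against.

    `topic` is a MAJOR.MINOR stream such as '4.9'. Without a pin, the highest
    version in that stream is returned; with a pin, that exact version is
    returned, provided it belongs to the stream.
    """
    stream = parse_version(topic)
    in_topic = [v for v in available if parse_version(v)[:2] == stream]
    if pinned is not None:
        if pinned not in in_topic:
            raise LookupError(f"{pinned} is not available in topic {topic}")
        return pinned
    if not in_topic:
        raise LookupError(f"no version available in topic {topic}")
    return max(in_topic, key=parse_version)


# Hypothetical list of versions known to the server.
available = ["4.9.1", "4.9.9", "4.9.23", "4.10.3"]

print(select_version(available, "4.9"))                  # latest in the stream
print(select_version(available, "4.9", pinned="4.9.9"))  # pinned version
```

Parsing versions into integer tuples matters here: a plain string comparison would rank "4.9.9" above "4.9.23".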

Conclusion

We have reviewed the Distributed CI concepts to understand how Red Hat is able to extend its CI outside of its own walls, all the way to the partner’s platforms. With DCI, Red Hat can integrate the partner's stack with unreleased Red Hat products, thus improving overall test coverage and being as prepared as possible for the GA release of a particular piece of software.

In this area we can go even further. DCI promotes and eases CI best practices for partners, such as continuous certification. This allows partners to be sure they are constantly Red Hat Certified and ready for GA, thus reducing their time to market.

To test complex scenarios, DCI also provides complete pipeline tooling for orchestrating such jobs. We hope to cover all these features in future articles, so stay tuned.

https://docs.distributed-ci.io

distributed-ci@redhat.com